Ethics and NDE 4.0

Ripi Singh & Tracie Clifford

Sound of an Alarm

There are so many unknowns surrounding the explosive growth of Industry 4.0 technology applications that a hard conversation about ethics is needed. The situation is particularly alarming as decision-making shifts from humans to autonomous machines that can learn to act but are incapable of fearing a penalty.

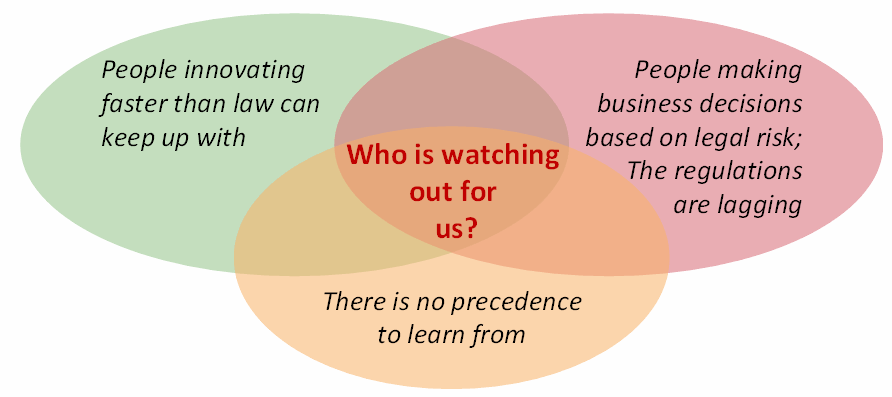

One characteristic of any revolution is that innovation outpaces the laws and regulations needed to keep business drivers in check, particularly where there is no precedent to provide initial guidance. The accompanying graphic depicts that dangerous zone, where no one is looking out for the general public.

What is Ethics?

The field of ethics[1] (or moral philosophy) involves systematizing, defending, and recommending concepts of right and wrong behavior. Philosophers today usually divide ethical theories into three general subject areas: metaethics, normative ethics, and applied ethics.

Metaethics focuses on questions such as universal truths, the will of God, the role of reason in ethical judgments, and the meaning of ethical terms themselves.

Normative ethics involves articulating the good habits we should acquire, the duties we should follow, or the consequences of our behavior on others.

Applied ethics involves examining specific controversial issues, such as abortion, infanticide, animal rights, environmental concerns, homosexuality, capital punishment, or nuclear war.

The topic of NDE 4.0 relates closely to normative ethics.

Proposed Ethical Principles for AI in NDE 4.0

The U.S. Department of Defense (DoD) officially adopted a set of ethical principles[2] for the use of artificial intelligence on February 24, 2020, following recommendations made to Secretary of Defense Dr. Mark T. Esper by the Defense Innovation Board in October 2019. These principles encompass five major areas: Responsibility, Equity, Traceability, Reliability, and Governance. The recommendations followed 15 months of consultation with leading AI experts in commercial industry, government, and academia, as well as the American public, through a rigorous process of feedback and analysis with multiple venues for public input and comment. We can adapt these principles to the NDE sector.

The adoption of AI ethical principles should align with the organization's growth, its NDE digitalization strategy, and the laws governing the use of AI systems in the host state or country. Adopting these principles will reinforce the organization's commitment to the highest ethical standards while building on its strong history of rigorous testing and validation of new inspection technologies and methods. The proposed AI ethical principles should build on the ethics framework already in use within the organization and on its longstanding norms and values.

While existing ethical frameworks provide a technology-neutral and enduring foundation for ethical behavior, the use of AI raises new ethical ambiguities and risks. The principles below are intended to address these new challenges and help ensure the responsible use of AI by the organization.

These principles will apply to both inspection and non-inspection functions and will assist the organization in upholding its legal, ethical, and policy commitments in the field of AI.

The AI ethical principles encompass the five major areas adopted from the DoD, plus a sixth, Data Management, specific to NDE data:

Responsibility: NDE personnel will exercise appropriate levels of judgment and care while remaining responsible for the development, deployment, and use of AI in NDE capabilities. [Note: For example, an ASNT Level 3 is responsible for requirements, validation, and training, whereas a Level 2 can deploy AI on qualified techniques.]

Equity: Digital inspection system developers will take deliberate steps to minimize unintended bias in AI-based NDE capabilities. [Note: This applies to both development and training data. Training data should come from diverse users and decision makers, with support from a diversity, equity, and inclusion (DEI) expert. Refer to the section on bias for further details.]

Traceability: AI capabilities will be developed and deployed such that relevant NDE personnel possess an appropriate understanding of the inspection technology, development processes, and operational methods applicable to AI capabilities, including through transparent and auditable NDE methodologies, data sources, inspection procedures, and documentation.

Reliability: AI capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness of such capabilities will be subject to calibration, validation, and probability of detection (POD) assessment within those defined uses across their entire life cycles.

Governance: Digital inspection system developers will design and engineer AI capabilities to fulfill their intended functions while possessing the ability to identify and avoid unintended consequences, and the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.

Data Management: NDE personnel will honor the rights and requirements governing data acquisition, transfer, storage, analysis, processing, security, and ownership/sharing, as determined by organizational policy and contractual obligations.

Call to Action

If you are working on autonomous machines with learning capability, please consider ethics seriously. What we have known and practiced up until now may not be adequate.

If you have an opinion on the content presented above, please join the conversation and let us refine it together.

About Tracie Clifford

Tracie is Quality Manager, ANDE-1 Examination Center, Nondestructive Testing and QA/QC Programs, at Chattanooga State. She has over 30 years of experience in quality assurance and is a champion of training and certification programs in the USA. She serves on ANSI TAG-176 in the development of ISO standards on quality terminology, quality systems, and quality technology.

[1] Internet Encyclopedia of Philosophy.

[2] U.S. Department of Defense, "DoD Adopts Ethical Principles for Artificial Intelligence," February 24, 2020.